The Humidity of Lies

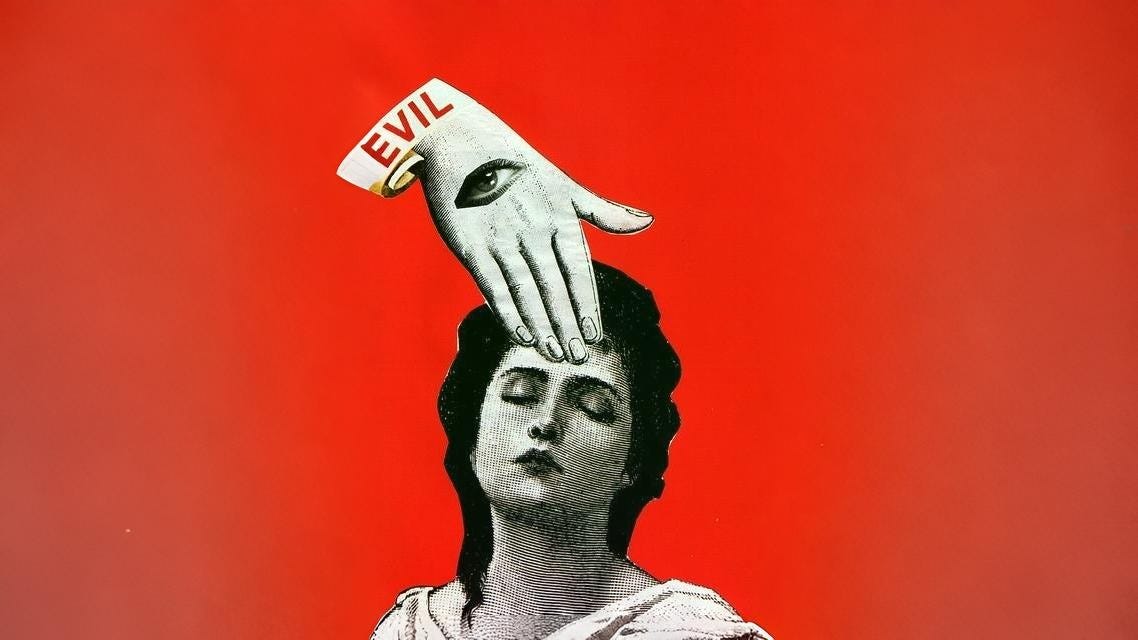

A word on propaganda

My grandfather collected stamps. Odd little rectangles from countries that no longer exist, their borders redrawn by wars and treaties, their leaders airbrushed out of photographs. He kept them in albums he never showed anyone. Once, when I was twelve, he let me look. “This one,” he said, pointing to a faded portrait of some completely unremarkable-looking guy, “this man ordered the deaths of millions of people. And this one next to him? Same. And the idiots who licked these stamps and pressed them on their letters home? They knew. Of course they knew.” He closed the album. “Knowing changes nothing.” Then he put Stalin back on the shelf.

I still think about that often.

Edward Bernays, Sigmund Freud’s nephew, gave us permission to stop using that terrible word after the Nazis made it unsavory: propaganda. He replaced it with “public relations” and called the underlying technique “the engineering of consent.”

In his 1928 book—titled, with admirable bluntness, Propaganda—he wrote that “the conscious and intelligent manipulation of the organized habits and opinions of the masses is an important element in democratic society.” Some think he was warning us, but he was actually just advertising his services.

Bernays is why women smoke. He orchestrated the “Torches of Freedom” campaign in 1929, hiring debutantes to light cigarettes during the Easter parade and positioning it as feminist liberation. He’s why Americans eat bacon for breakfast (and die from heart attacks); that was another campaign, this one for the pork industry. He’s why Guatemala’s democracy was overthrown in 1954; he ran the publicity campaign that branded President Arbenz a communist threat, clearing the way for the CIA coup that protected United Fruit Company’s plantations. The term “banana republic” had been coined half a century earlier, but that arrangement is exactly what it describes.

I read Bernays in agency training. We all did. His insight was simple: appeal not to the rational mind but to the unconscious. Don’t sell products; sell emotions. Don’t argue; associate. The hand reaches for the shelf before the thinking begins. We called this “behavioral automation.” Academics call it the mere exposure effect. Bernays just called it Tuesday.

By the 1970s, over 400 American journalists were on the CIA’s payroll through Operation Mockingbird—anchors, editors, columnists, people the country trusted. They weren’t just whispering in reporters’ ears. Some of them were writing the actual stories. Some were the newscasters. The program didn’t need to convince anyone of anything. It just needed to control which questions got asked and which didn’t.

COINTELPRO ran parallel operations domestically. The FBI infiltrated lawful organizations, spread misinformation, blackmailed targets, conducted warrantless surveillance. Martin Luther King Jr. was a primary target.

By March 1964, Hoover’s campaign against him was on “total war footing,” employing what internal memos described as “covert political warfare.” Reverend Jesse Jackson later described the effect: “When you have this feeling that the government really is watching you... it has a chilling effect. It takes away your freedom. And often for leaders, none of us are perfect, it neutralizes people.”

Neutralization. That was the goal. Not conversion. Not belief. Neutralization.

The distinction matters more than anything else I learned in those years working for the government.

Here is what nobody tells you about propaganda, what the documentaries miss, what the academics who study it from the outside perpetually fail to grasp: the lies are not the hard part.

The lies are obvious. Everyone knows they’re lies. The officials delivering them know. The journalists transcribing them know. The citizens receiving them know. This shared knowledge creates an atmosphere of permanent performance, an unspoken agreement that we will all pretend together because pretending is easier than the alternative.

What exactly would the alternative even look like? Who has the energy?

The hard part is the exhaustion.

I’ve sat in rooms where we discussed “information saturation strategies.” The premise was elegantly simple: a population that is overwhelmed cannot effectively resist. Not because they believe the false information, but because they lose the capacity to distinguish it from anything else. The cognitive load of maintaining skepticism toward everything eventually becomes unsustainable. People give up. They retreat into private life. They stop participating in the public sphere.

This is the goal. Not belief. Compliance without belief. Silence dressed as consent.

The Tavistock Clinic figured this out in the 1920s, ostensibly while treating shell-shocked soldiers. What they actually discovered was that trauma doesn’t just break people; it makes them pliable. Grief, confusion, fear: these function as tools. Once you know which lever makes a herd turn into a stampede, you don’t need speeches or ballots anymore. You just pull the lever.

After World War II, Tavistock’s fingerprints appeared on NATO, Cold War propaganda, the birth of mass advertising. The research continued. The methods were refined. By the time I entered government service, the institutional knowledge was mature and comprehensive. We had playbooks. We had metrics. We had case studies stretching back decades.

Modern PSYOP—Psychological Operations—is remarkably bureaucratic. The U.S. Army trains soldiers specifically for “Military Information Support Operations,” which involves “sharing specific information to foreign audiences to influence the emotions, motives, reasoning, and behavior of governments and citizens.” The job posting is on the Army’s recruitment website, if you want to read it. They offer accelerated promotion. They emphasize that PSYOP NCOs “out-pace their peers.”

None of this is secret. It’s just boring. And boring is the best camouflage.

A colleague once explained the operational philosophy to me using an analogy I’ve never forgotten. “Think of it like humidity,” he said. “You don’t notice humidity. You can’t see it. But it affects everything—how you sleep, how you think, how your joints feel in the morning. Propaganda works the same way. It’s not the thunderstorm. It’s the moisture in the air that’s always there, shaping what feels possible without you ever consciously noticing.”

This is why Western people consistently misunderstand propaganda. They look for the thunderstorm. They want to identify specific lies, debunk specific claims, trace specific disinformation campaigns. But the sophisticated operators don’t work that way. They work on the humidity. They work on what feels normal. They work on the background assumptions that people don’t even recognize as assumptions.

I spent three years on a project analyzing media environments in what we politely called “partner nations.” The most effective influence operations we studied never tried to convince anyone of anything specific. They simply flooded the information space with noise until signal became impossible to detect. They amplified division wherever division already existed. They made certainty feel naive and cynicism feel wise. They didn’t need to win arguments. They needed to make argument itself feel pointless.

In 2012, the Smith-Mundt Modernization Act quietly removed the prohibition on domestic dissemination of materials produced for foreign audiences. The significance of this change went largely unreported. The infrastructure built to influence foreign populations could now, legally, reach American citizens.

A friend of mine teaches high school history. Good teacher, genuinely cares about critical thinking and media literacy. Last year she assigned her students a project: identify propaganda in contemporary media, explain how it works, propose how citizens might resist it.

The projects were impressive. The students found examples everywhere: political ads, corporate PR, social media influence campaigns. They dissected techniques: emotional manipulation, false dichotomies, appeals to authority, bandwagon effects. They knew the vocabulary. They could spot the moves.

Then she asked them about their own media consumption. Their own beliefs. Their own assumptions.

Silence.

“Well, that’s different,” one student finally said. “The things I believe are actually true.”

My friend called me that evening, audibly shaken. “They can see it everywhere except in themselves. Every single one of them. And the scarier thing? I’m not sure I’m any different.”

This is the clearing that doesn’t exist. The place you imagine you’re standing when you analyze propaganda from a safe distance. The conviction that you would obviously recognize manipulation when it targeted you. That belief is itself the product of the humidity you don’t notice.

Now that algorithms serve as the primary distribution mechanism, the operation doesn’t need human operators anymore. The algorithms learn what spikes your cortisol, what makes you flinch, what makes you angry. They feed you more until your nervous system belongs to them. The dosage is measured in notifications and headlines and videos that pause your scroll for just a second longer than the last one.

The pharmacy is in your brain. All your own neurochemicals, weaponized against you.

I watched this transition happen from the inside. The crude techniques I learned—message repetition, emotional triggering, strategic ambiguity—have been automated and optimized at a scale Bernays couldn’t have imagined. The machine doesn’t sleep. The machine doesn’t get tired. The machine learns what you respond to and gives you more of it until responding is all you do.

Silence is not the opposite of propaganda. Silence is its goal.

Not the silence of peace. The silence of exhaustion. The silence of having processed so many contradictions that responding feels pointless. The silence of private cynicism paired with public compliance—that distinctly modern form of spiritual surrender where you know everything and do nothing.

In every country I studied, this silence was the success metric. Not enthusiasm. Not belief. A population that has stopped expecting truth, stopped demanding accountability, stopped participating in collective decision-making. A population that scrolls.

The propaganda didn’t convince them. It emptied them. And an empty public is a stable public.

There’s a study from the behavioral research literature that haunted me for years. Researchers showed subjects an obviously false claim. The subjects correctly identified it as false. Then the researchers showed them the claim again. And again. And again.

By the seventh exposure, many subjects rated the claim as “probably true.”

Nothing had changed about the claim. No new evidence had been offered. The repetition itself created a feeling of familiarity, and familiarity masquerades as truth. The brain takes the path of least resistance. Recognizing something feels like understanding it. Ease of processing feels like accuracy.

We called this “truth by repetition” in our training materials. The technique is older than any of us. But the delivery mechanism—every screen, every notification, every algorithmically optimized piece of content—has made it infinitely more powerful.

My grandmother survived the Nazi regime. She never talked about it directly. Near the end, she gave me advice I didn’t understand until much later.

“Keep something true,” she said. “One small true thing. A memory, a relationship, a practice. Something they can’t touch. Water it in secret. Never speak of it in public. Let them have everything else if you must. But keep one thing true.”

I asked her why.

“So you remember what truth feels like. Otherwise you forget. You think you won’t, but you do. And once you forget, you become them. Simply because there’s nothing left that disagrees.”

The question isn’t whether propaganda works.

The question is: what does “working” mean? If success requires belief, then most propaganda fails. Nobody believes. Not really. Not the officials, not the journalists, not the citizens.

But if success means compliance without belief, exhaustion instead of conversion, silence instead of agreement—then propaganda has never been more successful than it is right now.

We are not being convinced. We are being depleted.

I know because I helped design the systems. I know because I read the metrics. I know because I sat in the rooms where we measured success by what they stopped doing and not by what they started believing.

The human brain is incredibly primitive. And it hasn’t evolved for millennia. And that’s why you can always repeat history. Always.
